Will the promotion of ‘relationships’ with machines contribute to societal wellbeing and human flourishing, or provide new opportunities for manipulation and deception of the vulnerable?

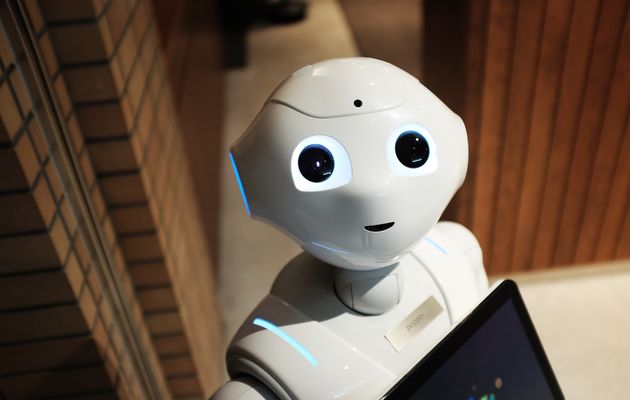

Photo: Alex Knight. Unsplash (CC0).

This is the first part of a Cambridge Paper by Prof. John Wyatt, published by the Jubilee Centre.

SUMMARY

Interactions with apparently human-like and ‘emotionally intelligent’ AIs are likely to become commonplace within the next ten years, ranging from entirely disembodied agents like chatbots through to physical humanoid robots.

This will lead to new and troubling ethical, personal and legal dilemmas. Will the promotion of ‘relationships’ with machines contribute to societal wellbeing and human flourishing, or provide new opportunities for manipulation and deception of the vulnerable?

As biblical Christians we are called to safeguard and to celebrate the centrality of embodied human-to-human relationships, particularly in essential caring and therapeutic roles, and in our families and Christian communities.

INTRODUCTION

The 2013 movie Her constructs a near-future world in which Theodore, a young single man on the rebound, falls in love with Samantha. She’s cool, sassy, knowing and intimate.

But she is entirely virtual – an artificial intelligence in the cloud that remains in contact with Theodore wherever he goes, communicating through a small earbud. Yet perhaps the fictional world of Her is not so far away.

Eugenia Kuyda and Roman Mazurenko were tech entrepreneurs who developed a deep friendship carried out mainly online with text messages. But then, at the age of 22, Roman was killed in a road accident. Eugenia was devastated and went through what she called ‘a dark phase’.

Then she decided to build an artificially intelligent chatbot to replicate Roman’s personality, uploading all the text messages that Roman had sent over the years. Eugenia found herself ‘sharing things with the bot that I wouldn’t necessarily tell Roman when he was alive. It was incredibly powerful…’

The AI chatbot Replika which resulted from this work is described as ‘a safe space for you to talk about yourself every day’. As Eugenia put it, ‘Those unconditional friendships and those relationships when we are being honest and being real… are so rare, and becoming rarer…so in some way the AI is helping you to open up and be more honest.’[1]

AI CHATBOTS

Hundreds of commercial companies around the world are developing AI chatbots and devices such as Amazon’s Alexa, Google Home and Apple’s Siri. The companies are engaged in an intense competition to have their devices present within every home, every workplace and every vehicle.

In 2018 Amazon reported that hundreds of thousands of developers and device makers were building Alexa ‘experiences’, contributing to more than 70,000 ‘skills’ (individual topics that Alexa is able to converse about), and that more than 28,000 different Alexa-connected smart devices were available.[2]

It seems likely that interactions with apparently human-like and ‘emotionally intelligent’ AIs will become commonplace within the next ten years. But how should we think of these ‘relationships’?

Can they play a helpful role for those grieving the loss of a loved one or those merely wishing to have an honest and self-disclosing conversation? Or could synthetic relationships with AIs somehow interfere with the messy process of real human-to-human interactions, and with our understanding of what it means to be a person?

CARE ROBOTS

Paro is a sophisticated AI-powered robot with sensors for touch, light, sound, temperature, and movement, designed to mimic the behaviour of a baby seal.[3]

In her book Alone Together Sherry Turkle reflects on an interaction between Miriam, an elderly woman living alone in a care facility, and Paro.[4] On this occasion Miriam is particularly depressed because of a difficult interaction with her son, and she believes that the robot is depressed as well.

She turns to Paro, strokes it again, and says, ‘Yes, you’re sad, aren’t you? It’s tough out there. Yes, it’s hard.’ In response Paro turns its head towards her and purrs approvingly.

Sherry Turkle writes ‘…in the moment of apparent connection between Miriam and her Paro, a moment that comforted her, the robot understood nothing. Miriam experienced an intimacy with another, but she was in fact alone…. We don’t seem to care what these artificial intelligences “know” or “understand” of the human moments we might “share” with them. …We are poised to attach to the inanimate without prejudice.’

MENTAL HEALTH APPLICATIONS

Synthetic ‘relationships’ are playing an increasingly important role in mental health monitoring and therapy. The delightfully named Woebot is a smartphone-based AI chatbot designed to provide cognitive behavioural therapy for people with depression or anxiety.[5]

It provides a text-based ‘digital therapist’ who responds immediately night or day. An obvious advantage of Woebot compared to a human therapist is that it’s constantly available. As one user put it, ‘The nice thing about something like Woebot is it’s there on your phone while you’re out there living your life.’

Alison Darcy, a clinical psychologist at Stanford University who created Woebot, argues that it cannot be a substitute for a relationship with a human therapist. But there is evidence that it can provide positive benefits for those with mental health concerns.

‘The Woebot experience doesn’t map onto what we know to be a human-to-computer relationship, and it doesn’t map onto what we know to be a human-to-human relationship either,’ Darcy said. ‘It seems to be something in the middle.’[6]

There is a common narrative which underpins the introduction of AI devices and ‘companions’ in many different fields of care, therapy and education. There are simply not enough skilled humans to fulfil the roles.

The needs for care across the planet are too great and they are projected to become ever greater. We have to find a technical solution to the lack of human carers, therapists and teachers. Machines therefore can be a ‘good enough’ replacement.

Some pro-AI enthusiasts go further, arguing that humans are frequently poorly trained, bored, fatigued, expensive and occasionally criminal. In contrast the new technological solution is available 24 hours a day.

It never becomes bored or inattentive. It is continuously updated and operating according to the latest guidelines and ethical codes. It can be multiplied and scaled indefinitely.

To many technologists machine carers will not only become essential, they will be superior to the humans that they replace! (Of course this technocentric narrative is highly misleading and will be discussed in greater detail below.)

As the technology continues to develop, supported by massive commercial funding, it seems likely that in the foreseeable future we will be confronted by a spectrum of different machines that offer simulated relationships, ranging from the entirely disembodied, like a chatbot, via an avatar or image on a screen, through to a physical humanoid robot.

SEX ROBOTS

The development of artificially intelligent sex robots provides another perspective for engaging with the complexities of human–machine relationships. Academic debate has focused on whether the use of sexbots by adults in private should be regulated and whether on balance they will be beneficial for humans.[7]

Classical libertarian arguments have been used by those in favour of robot sex, especially focusing attention on humans who suffer enforced sexual deprivation, including prisoners, the military, those forced to live in single-sex environments, and those with mental health or learning disabilities.

Again we find a version of the ‘good enough’ argument; sex with a machine may not be the same as with a loving human partner but it is better, so the argument goes, than being deprived of sexual activity altogether.

There is no doubt that the development of humanoid sex robots will lead to troubling ethical and regulatory dilemmas. Should the law permit humans to enact violent and abusive actions on humanoid robots who plead realistically for mercy? Should the use of child sex robots be outlawed?

These questions lie outside the scope of this paper, but they raise complex moral and legal issues of importance for secular thinkers as well as for Christians.[8]

Although libertarian arguments are already being employed to oppose legal restrictions on sex robots, in my view regulation and criminal sanctions will become necessary to protect not only children but also adults from the potential harms of acting out violent and abusive behaviour with humanoid robots.

John Wyatt is Emeritus Professor of Neonatal Paediatrics, Ethics & Perinatology at University College London, and a senior researcher at the Faraday Institute for Science and Religion, Cambridge.

This paper was first published by the Jubilee Centre.

NOTES

[1] BBC Radio interview, 16 February 2018.

[2] https://developer.amazon.com/blogs/alexa/post/38bb01ef-ac9b-49ec-9e2c-fcb0b51a8b31/2018-highlights-for-alexa-skill-builders

[3] www.parorobots.com

[4] Sherry Turkle, Alone Together, Basic Books, 2011.

[5] See https://woebot.io

[6] Quoted in www.businessinsider.com/stanford-therapy-chatbot-app-depression-anxiety-woebot-2018-1?r=US&IR=T

[7] John Danaher and Neil McArthur (eds), Robot Sex: Social and Ethical Implications, MIT Press, 2017.

[8] Ibid.
