Meta trains its new Massively Multilingual Speech AI models with data from Bible recordings and releases them as open-source software.

![Meta headquarters. / Meta](https://cms.evangelicalfocus.com/upload/imagenes/646e50587e86d_mthad.jpg) Meta headquarters. / Meta


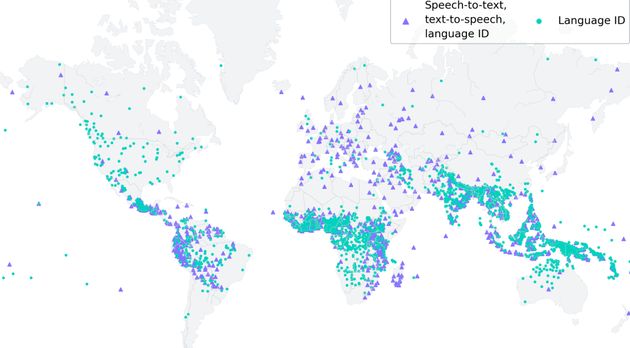

Meta has developed artificial intelligence models that can identify more than 4,000 spoken languages and recognise speech in 1,100 of them, drawing on data from the Bible.

That is 40 times more languages than any previously known technology could identify. “It’s a significant step toward preserving languages that are at risk of disappearing”, says the company behind Facebook and Instagram.

Their Massively Multilingual Speech (MMS) models aim to “make it easier for people to access information and use devices in their preferred language”.

Meta is releasing them as open-source software so that “others in the research community can build upon our work”.

There are around 7,000 languages in the world, but existing speech recognition models cover only about 100 of them comprehensively.

To overcome this limitation and train the models, Meta’s researchers created two datasets from religious texts whose translations, and audio recordings of people reading them, are publicly available in many languages.

One dataset contains audio recordings of the New Testament in 1,107 languages, together with the corresponding text, collected from the internet; it provides an average of 32 hours of data per language. The other contains unlabelled recordings of various other Christian religious readings.

However, the company stresses that “while the content of the audio recordings is religious, our analysis shows that this doesn’t bias the model to produce more religious language”.

In the future, Meta hopes “to increase MMS’s coverage to support even more languages, and also tackle the challenge of handling dialects, which is often difficult for existing speech technology”.

The opinions expressed by our contributors are their own and may or may not coincide with the position of the editorial board of Protestante Digital.
